Supplementary code for the Build a Large Language Model From Scratch book by Sebastian Raschka

Code repository: https://github.com/rasbt/LLMs-from-scratch

Appendix A: Introduction to PyTorch (Part 2)

A.9 Optimizing training performance with GPUs

A.9.1 PyTorch computations on GPU devices

import torch

print(torch.__version__)


print(torch.cuda.is_available())

True

tensor_1 = torch.tensor([1., 2., 3.])

tensor_2 = torch.tensor([4., 5., 6.])

print(tensor_1 + tensor_2)

tensor([5., 7., 9.])

tensor_1 = tensor_1.to("cuda")

tensor_2 = tensor_2.to("cuda")

print(tensor_1 + tensor_2)

tensor([5., 7., 9.], device='cuda:0')

tensor_1 = tensor_1.to("cpu")

print(tensor_1 + tensor_2)

---------------------------------------------------------------------------

RuntimeError Traceback (most recent call last)

/tmp/ipykernel_2321/2079609735.py in <cell line: 2>()

1 tensor_1 = tensor_1.to("cpu")

----> 2 print(tensor_1 + tensor_2)

RuntimeError: Expected all tensors to be on the same device, but found at least two devices, cuda:0 and cpu!
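The error above arises because the two operands live on different devices. The following is a minimal sketch of one way to avoid it, not the only one. It assumes a variable named `device` (introduced here for illustration) and falls back to the CPU when no GPU is visible, so it also runs on machines without CUDA.

```python
import torch

# Pick the GPU when one is visible, otherwise fall back to the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")

tensor_1 = torch.tensor([1., 2., 3.]).to("cpu")
tensor_2 = torch.tensor([4., 5., 6.]).to(device)

# Move the first operand to wherever the second one lives before adding.
tensor_1 = tensor_1.to(tensor_2.device)
result = tensor_1 + tensor_2
print(result)
```

Reading the target from `tensor_2.device` rather than hard-coding `"cuda"` keeps the snippet valid on both CPU-only and GPU machines.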

A.9.2 Single-GPU training

X_train = torch.tensor([
    [-1.2, 3.1],
    [-0.9, 2.9],
    [-0.5, 2.6],
    [2.3, -1.1],
    [2.7, -1.5]
])

y_train = torch.tensor([0, 0, 0, 1, 1])

X_test = torch.tensor([
    [-0.8, 2.8],
    [2.6, -1.6],
])

y_test = torch.tensor([0, 1])

from torch.utils.data import Dataset


class ToyDataset(Dataset):
    def __init__(self, X, y):
        self.features = X
        self.labels = y

    def __getitem__(self, index):
        one_x = self.features[index]
        one_y = self.labels[index]
        return one_x, one_y

    def __len__(self):
        return self.labels.shape[0]


train_ds = ToyDataset(X_train, y_train)
test_ds = ToyDataset(X_test, y_test)

from torch.utils.data import DataLoader

torch.manual_seed(123)

train_loader = DataLoader(
    dataset=train_ds,
    batch_size=2,
    shuffle=True,
    num_workers=1,
    drop_last=True
)

test_loader = DataLoader(
    dataset=test_ds,
    batch_size=2,
    shuffle=False,
    num_workers=1
)
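With five training examples and `batch_size=2`, `drop_last=True` discards the final, incomplete batch, which is why the training log reports two batches per epoch. The sketch below illustrates that effect under two assumptions made only for this example: `TensorDataset` stands in for `ToyDataset`, and `num_workers=0` is used so that no worker processes are needed.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset

# Stand-in data with the same size as the training set: 5 examples, 2 features.
X = torch.arange(10, dtype=torch.float32).reshape(5, 2)
y = torch.tensor([0, 0, 0, 1, 1])
ds = TensorDataset(X, y)  # assumption: used here in place of ToyDataset

torch.manual_seed(123)
loader_drop = DataLoader(ds, batch_size=2, shuffle=True, num_workers=0, drop_last=True)
loader_keep = DataLoader(ds, batch_size=2, shuffle=True, num_workers=0, drop_last=False)

# Number of batches per epoch with and without dropping the last batch.
print(len(loader_drop))
print(len(loader_keep))

# Collect the size of every batch when the incomplete batch is kept.
batch_sizes_keep = [features.shape[0] for features, _ in loader_keep]
print(batch_sizes_keep)
```

Dropping the last batch means one example is skipped in each epoch; because `shuffle=True`, the skipped example generally differs from epoch to epoch.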

class NeuralNetwork(torch.nn.Module):
    def __init__(self, num_inputs, num_outputs):
        super().__init__()

        self.layers = torch.nn.Sequential(

            # 1st hidden layer
            torch.nn.Linear(num_inputs, 30),
            torch.nn.ReLU(),

            # 2nd hidden layer
            torch.nn.Linear(30, 20),
            torch.nn.ReLU(),

            # output layer
            torch.nn.Linear(20, num_outputs),
        )

    def forward(self, x):
        logits = self.layers(x)
        return logits
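As a quick sanity check on the architecture, the sketch below rebuilds the same stack of layers as a bare `torch.nn.Sequential`, assuming `num_inputs=2` and `num_outputs=2` to match the toy data. It counts the trainable parameters and confirms that `.to(device)` moves all of them. The names `num_params` and `param_devices` are introduced here for illustration.

```python
import torch

torch.manual_seed(123)

# Same layer sizes as the NeuralNetwork above, with 2 inputs and 2 outputs.
layers = torch.nn.Sequential(
    torch.nn.Linear(2, 30),
    torch.nn.ReLU(),
    torch.nn.Linear(30, 20),
    torch.nn.ReLU(),
    torch.nn.Linear(20, 2),
)

# Sum the element counts of all weight and bias tensors.
num_params = sum(p.numel() for p in layers.parameters() if p.requires_grad)
print("Trainable parameters:", num_params)

# Move the model to the GPU if one is available, otherwise stay on the CPU.
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
layers = layers.to(device)
param_devices = {p.device.type for p in layers.parameters()}
print("Parameter devices:", param_devices)

# The input batch must be created on (or moved to) the same device as the model.
x = torch.rand(4, 2, device=device)
with torch.no_grad():
    logits = layers(x)
print(logits.shape)
```

The count follows from the layer shapes: (2·30 + 30) + (30·20 + 20) + (20·2 + 2) = 752.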

import torch.nn.functional as F

torch.manual_seed(123)
model = NeuralNetwork(num_inputs=2, num_outputs=2)

device = torch.device("cuda" if torch.cuda.is_available() else "cpu") # NEW
model = model.to(device) # NEW

optimizer = torch.optim.SGD(model.parameters(), lr=0.5)

num_epochs = 3

for epoch in range(num_epochs):

    model.train()
    for batch_idx, (features, labels) in enumerate(train_loader):

        features, labels = features.to(device), labels.to(device) # NEW
        logits = model(features)
        loss = F.cross_entropy(logits, labels) # Loss function

        optimizer.zero_grad()
        loss.backward()
        optimizer.step()

        ### LOGGING
        print(f"Epoch: {epoch+1:03d}/{num_epochs:03d}"
              f" | Batch {batch_idx:03d}/{len(train_loader):03d}"
              f" | Train/Val Loss: {loss:.2f}")

    model.eval()
    # Optional model evaluation

Epoch: 001/003 | Batch 000/002 | Train/Val Loss: 0.75

Epoch: 001/003 | Batch 001/002 | Train/Val Loss: 0.65

Epoch: 002/003 | Batch 000/002 | Train/Val Loss: 0.44

Epoch: 002/003 | Batch 001/002 | Train/Val Loss: 0.13

Epoch: 003/003 | Batch 000/002 | Train/Val Loss: 0.03

Epoch: 003/003 | Batch 001/002 | Train/Val Loss: 0.00

def compute_accuracy(model, dataloader, device):

    model = model.eval()
    correct = 0.0
    total_examples = 0

    for idx, (features, labels) in enumerate(dataloader):

        features, labels = features.to(device), labels.to(device) # New

        with torch.no_grad():
            logits = model(features)

        predictions = torch.argmax(logits, dim=1)
        compare = labels == predictions
        correct += torch.sum(compare)
        total_examples += len(compare)

    return (correct / total_examples).item()

compute_accuracy(model, train_loader, device=device)

1.0

compute_accuracy(model, test_loader, device=device)

1.0
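To make the steps inside `compute_accuracy` concrete, here is a small sketch that applies the same `argmax`, compare, and sum operations to a single hand-written batch. The logits and labels below are made up for illustration and are not outputs of the trained model.

```python
import torch

# Hypothetical logits for three examples and two classes.
logits = torch.tensor([
    [2.0, -1.0],
    [0.1, 0.3],
    [1.5, 1.0],
])
labels = torch.tensor([0, 1, 1])

# The predicted class is the index of the largest logit in each row.
predictions = torch.argmax(logits, dim=1)

# Boolean tensor marking which predictions match the labels.
compare = labels == predictions

# Fraction of correct predictions in this batch.
accuracy = (torch.sum(compare) / len(compare)).item()
print(predictions, compare, accuracy)
```

Here the third example is misclassified, so two of the three predictions are correct.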

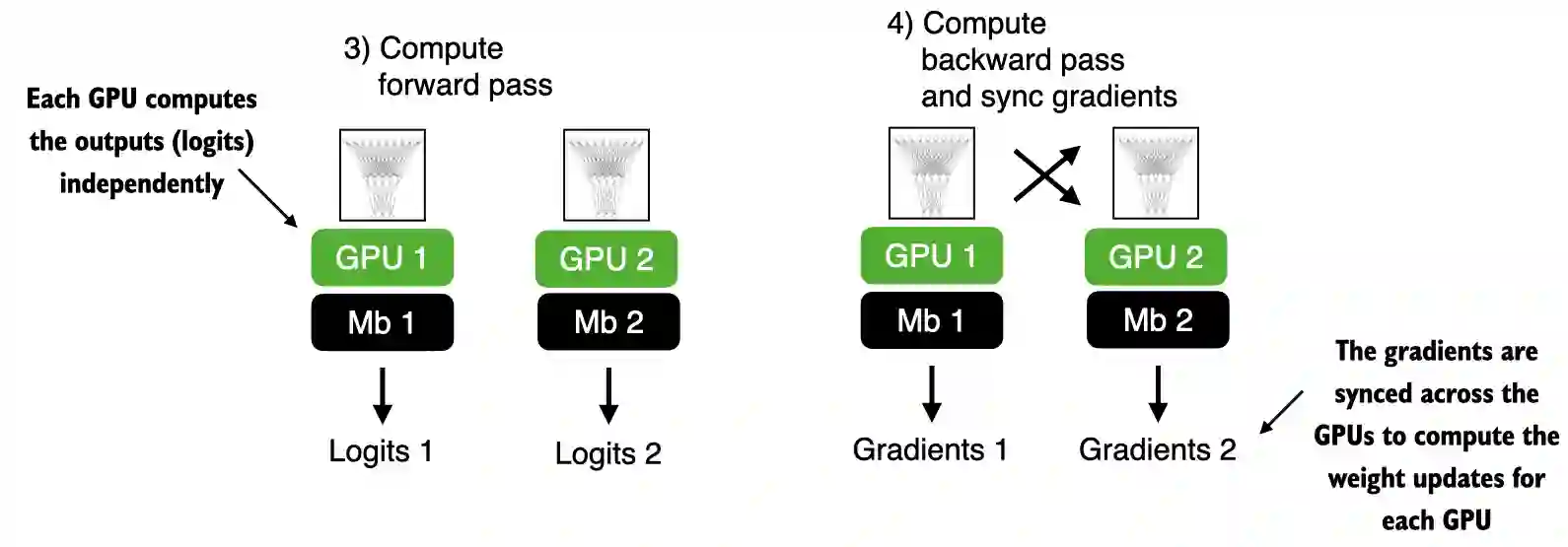

A.9.3 Training with multiple GPUs

See DDP-script.py
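DDP-script.py is not reproduced here. As a rough illustration of one ingredient that multi-GPU data-parallel training typically relies on, the sketch below shows how `DistributedSampler` could split a small dataset across processes. It is not the referenced script: `world_size = 2` is a hypothetical process count, `TensorDataset` stands in for `ToyDataset`, and `num_replicas` and `rank` are passed explicitly so that the example runs in a single process without initializing a process group.

```python
import torch
from torch.utils.data import DataLoader, TensorDataset
from torch.utils.data.distributed import DistributedSampler

# Stand-in data: 5 examples, 2 features (same size as the toy training set).
X = torch.arange(10, dtype=torch.float32).reshape(5, 2)
y = torch.tensor([0, 0, 0, 1, 1])
ds = TensorDataset(X, y)  # assumption: used here in place of ToyDataset

world_size = 2  # hypothetical number of processes (one per GPU)
indices_per_rank = []

for rank in range(world_size):
    # Each rank gets its own sampler that yields only that rank's share of indices.
    sampler = DistributedSampler(ds, num_replicas=world_size, rank=rank, shuffle=False)
    loader = DataLoader(ds, batch_size=2, sampler=sampler)
    indices_per_rank.append(list(sampler))
    print(f"rank {rank}: sample indices {indices_per_rank[-1]}, batches {len(loader)}")
```

Because five examples do not divide evenly by two, the sampler pads the index list by repeating an example so that every rank receives the same number of samples. In an actual multi-process run, each process would construct only the sampler for its own rank.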