|

Supplementary code for the Build a Large Language Model From Scratch book by Sebastian Raschka Code repository: https://github.com/rasbt/LLMs-from-scratch |

|

Generating A Preference Dataset With Llama 3.1 70B And Ollama#

Preference finetuning is a process to align an instruction-finetuned LLM with human preferences

There are multiple ways to create a dataset for preference finetuning an LLM

We use the instruction-finetuned LLM to generate multiple responses and have humans rank them based on their preference and/or given preference criteria

We use the instruction-finetuned LLM to generate multiple responses and have LLMs rank them based on given preference criteria

We use an LLM to generate preferred and dispreferred responses given certain preference criteria

In this notebook, we consider approach 3

This notebook uses a 70-billion-parameter Llama 3.1-Instruct model through ollama to generate preference labels for an instruction dataset

The expected format of the instruction dataset is as follows:

Input#

[

{

"instruction": "What is the state capital of California?",

"input": "",

"output": "The state capital of California is Sacramento.",

},

{

"instruction": "Provide a synonym for 'fast'.",

"input": "",

"output": "A synonym for 'fast' is 'quick'.",

},

{

"instruction": "What is the capital of Greece?",

"input": "",

"output": "The capital of Greece is Athens.",

},

...

]

The output dataset will look as follows, where more polite responses are preferred ('chosen'), and more impolite responses are dispreferred ('rejected'):

[

{

"instruction": "What is the state capital of California?",

"input": "",

"output": "The state capital of California is Sacramento.",

"rejected": "Look, the state capital of California is obviously Sacramento.",

"chosen": "The state capital of California is Sacramento."

},

{

"instruction": "Provide a synonym for 'fast'.",

"input": "",

"output": "A synonym for 'fast' is 'quick'.",

"chosen": "A suitable alternative to 'fast' would be 'quick'.",

"rejected": "A synonym for 'fast' is 'quick'."

},

{

"instruction": "What is the capital of Greece?",

"input": "",

"output": "The capital of Greece is Athens.",

"chosen": "I'd be happy to help! The capital of Greece is indeed Athens.",

"rejected": "The capital of Greece is Athens."

},

...

]

Output#

The code doesn’t require a GPU and runs on a laptop given enough RAM

from importlib.metadata import version

pkgs = ["tqdm", # Progress bar

]

for p in pkgs:

print(f"{p} version: {version(p)}")

tqdm version: 4.65.0

Installing Ollama and Downloading Llama 3.1#

Ollama is an application to run LLMs efficiently

It is a wrapper around llama.cpp, which implements LLMs in pure C/C++ to maximize efficiency

Note that it is a tool for using LLMs to generate text (inference), not training or finetuning LLMs

Prior to running the code below, install ollama by visiting https://ollama.com and following the instructions (for instance, clicking on the “Download” button and downloading the ollama application for your operating system)

For macOS and Windows users, click on the ollama application you downloaded; if it prompts you to install the command line usage, say “yes”

Linux users can use the installation command provided on the ollama website

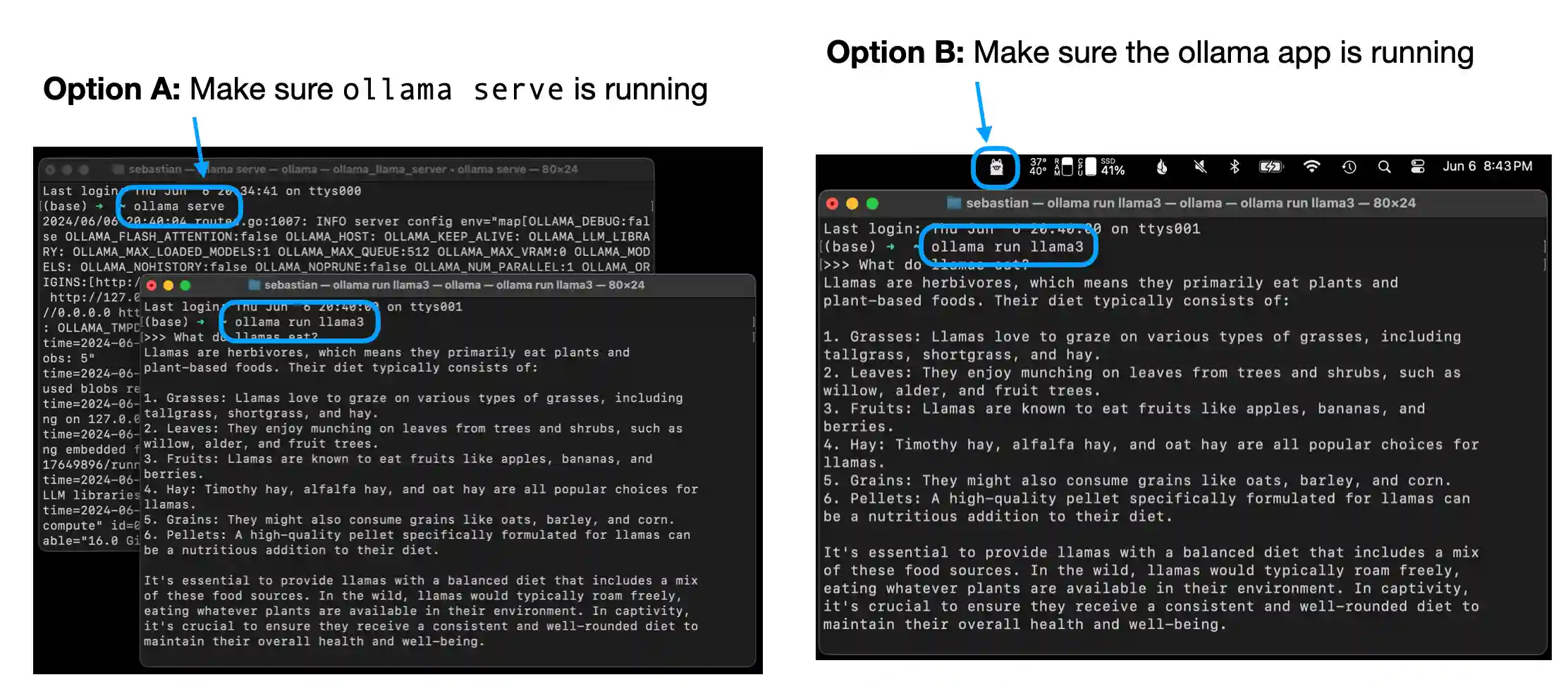

In general, before we can use ollama from the command line, we have to either start the ollama application or run

ollama servein a separate terminal

With the ollama application or

ollama serverunning, in a different terminal, on the command line, execute the following command to try out the 70-billion-parameter Llama 3.1 model

# 70B model

ollama run llama3.1:70b

The output looks like as follows:

$ ollama run llama3.1:70b

pulling manifest

pulling aa81b541aae6... 100% ▕████████████████▏ 39 GB

pulling 8cf247399e57... 100% ▕████████████████▏ 1.7 KB

pulling f1cd752815fc... 100% ▕████████████████▏ 12 KB

pulling 56bb8bd477a5... 100% ▕████████████████▏ 96 B

pulling 3c1c2d3df5b3... 100% ▕████████████████▏ 486 B

verifying sha256 digest

writing manifest

removing any unused layers

success

Note that

llama3.1:70brefers to the instruction finetuned 70-billion-parameter Llama 3.1 modelAlternatively, you can also use the smaller, more resource-effiicent 8-billion-parameters Llama 3.1 model, by replacing

llama3.1:70bwithllama3.1After the download has been completed, you will see a command line prompt that allows you to chat with the model

Try a prompt like “What do llamas eat?”, which should return an output similar to the following:

>>> What do llamas eat?

Llamas are ruminant animals, which means they have a four-chambered

stomach and eat plants that are high in fiber. In the wild, llamas

typically feed on:

1. Grasses: They love to graze on various types of grasses, including tall

grasses, wheat, oats, and barley.

You can end this session using the input

/bye

Using Ollama’s REST API#

Now, an alternative way to interact with the model is via its REST API in Python via the following function

Before you run the next cells in this notebook, make sure that ollama is still running, as described above, via

ollama servein a terminalthe ollama application

Next, run the following code cell to query the model

First, let’s try the API with a simple example to make sure it works as intended:

import urllib.request

import json

def query_model(prompt, model="llama3.1:70b", url="http://localhost:11434/api/chat"):

# Create the data payload as a dictionary

data = {

"model": model,

"messages": [

{

"role": "user",

"content": prompt

}

],

"options": {

"seed": 123,

"temperature": 0,

}

}

# Convert the dictionary to a JSON formatted string and encode it to bytes

payload = json.dumps(data).encode("utf-8")

# Create a request object, setting the method to POST and adding necessary headers

request = urllib.request.Request(url, data=payload, method="POST")

request.add_header("Content-Type", "application/json")

# Send the request and capture the response

response_data = ""

with urllib.request.urlopen(request) as response:

# Read and decode the response

while True:

line = response.readline().decode("utf-8")

if not line:

break

response_json = json.loads(line)

response_data += response_json["message"]["content"]

return response_data

result = query_model("What do Llamas eat?")

print(result)

---------------------------------------------------------------------------

ConnectionRefusedError Traceback (most recent call last)

File /Library/Frameworks/Python.framework/Versions/3.10/lib/python3.10/urllib/request.py:1348, in AbstractHTTPHandler.do_open(self, http_class, req, **http_conn_args)

1347 try:

-> 1348 h.request(req.get_method(), req.selector, req.data, headers,

1349 encode_chunked=req.has_header('Transfer-encoding'))

1350 except OSError as err: # timeout error

File /Library/Frameworks/Python.framework/Versions/3.10/lib/python3.10/http/client.py:1276, in HTTPConnection.request(self, method, url, body, headers, encode_chunked)

1275 """Send a complete request to the server."""

-> 1276 self._send_request(method, url, body, headers, encode_chunked)

File /Library/Frameworks/Python.framework/Versions/3.10/lib/python3.10/http/client.py:1322, in HTTPConnection._send_request(self, method, url, body, headers, encode_chunked)

1321 body = _encode(body, 'body')

-> 1322 self.endheaders(body, encode_chunked=encode_chunked)

File /Library/Frameworks/Python.framework/Versions/3.10/lib/python3.10/http/client.py:1271, in HTTPConnection.endheaders(self, message_body, encode_chunked)

1270 raise CannotSendHeader()

-> 1271 self._send_output(message_body, encode_chunked=encode_chunked)

File /Library/Frameworks/Python.framework/Versions/3.10/lib/python3.10/http/client.py:1031, in HTTPConnection._send_output(self, message_body, encode_chunked)

1030 del self._buffer[:]

-> 1031 self.send(msg)

1033 if message_body is not None:

1034

1035 # create a consistent interface to message_body

File /Library/Frameworks/Python.framework/Versions/3.10/lib/python3.10/http/client.py:969, in HTTPConnection.send(self, data)

968 if self.auto_open:

--> 969 self.connect()

970 else:

File /Library/Frameworks/Python.framework/Versions/3.10/lib/python3.10/http/client.py:940, in HTTPConnection.connect(self)

939 sys.audit("http.client.connect", self, self.host, self.port)

--> 940 self.sock = self._create_connection(

941 (self.host,self.port), self.timeout, self.source_address)

942 self.sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)

File /Library/Frameworks/Python.framework/Versions/3.10/lib/python3.10/socket.py:845, in create_connection(address, timeout, source_address)

844 try:

--> 845 raise err

846 finally:

847 # Break explicitly a reference cycle

File /Library/Frameworks/Python.framework/Versions/3.10/lib/python3.10/socket.py:833, in create_connection(address, timeout, source_address)

832 sock.bind(source_address)

--> 833 sock.connect(sa)

834 # Break explicitly a reference cycle

ConnectionRefusedError: [Errno 61] Connection refused

During handling of the above exception, another exception occurred:

URLError Traceback (most recent call last)

Cell In[2], line 42

37 response_data += response_json["message"]["content"]

39 return response_data

---> 42 result = query_model("What do Llamas eat?")

43 print(result)

Cell In[2], line 30, in query_model(prompt, model, url)

28 # Send the request and capture the response

29 response_data = ""

---> 30 with urllib.request.urlopen(request) as response:

31 # Read and decode the response

32 while True:

33 line = response.readline().decode("utf-8")

File /Library/Frameworks/Python.framework/Versions/3.10/lib/python3.10/urllib/request.py:216, in urlopen(url, data, timeout, cafile, capath, cadefault, context)

214 else:

215 opener = _opener

--> 216 return opener.open(url, data, timeout)

File /Library/Frameworks/Python.framework/Versions/3.10/lib/python3.10/urllib/request.py:519, in OpenerDirector.open(self, fullurl, data, timeout)

516 req = meth(req)

518 sys.audit('urllib.Request', req.full_url, req.data, req.headers, req.get_method())

--> 519 response = self._open(req, data)

521 # post-process response

522 meth_name = protocol+"_response"

File /Library/Frameworks/Python.framework/Versions/3.10/lib/python3.10/urllib/request.py:536, in OpenerDirector._open(self, req, data)

533 return result

535 protocol = req.type

--> 536 result = self._call_chain(self.handle_open, protocol, protocol +

537 '_open', req)

538 if result:

539 return result

File /Library/Frameworks/Python.framework/Versions/3.10/lib/python3.10/urllib/request.py:496, in OpenerDirector._call_chain(self, chain, kind, meth_name, *args)

494 for handler in handlers:

495 func = getattr(handler, meth_name)

--> 496 result = func(*args)

497 if result is not None:

498 return result

File /Library/Frameworks/Python.framework/Versions/3.10/lib/python3.10/urllib/request.py:1377, in HTTPHandler.http_open(self, req)

1376 def http_open(self, req):

-> 1377 return self.do_open(http.client.HTTPConnection, req)

File /Library/Frameworks/Python.framework/Versions/3.10/lib/python3.10/urllib/request.py:1351, in AbstractHTTPHandler.do_open(self, http_class, req, **http_conn_args)

1348 h.request(req.get_method(), req.selector, req.data, headers,

1349 encode_chunked=req.has_header('Transfer-encoding'))

1350 except OSError as err: # timeout error

-> 1351 raise URLError(err)

1352 r = h.getresponse()

1353 except:

URLError: <urlopen error [Errno 61] Connection refused>

Load JSON Entries#

Now, let’s get to the data generation part

Here, for a hands-on example, we use the

instruction-data.jsonfile that we originally used to instruction-finetune the model in chapter 7:

from pathlib import Path

json_file = Path("..", "01_main-chapter-code", "instruction-data.json")

with open(json_file, "r") as file:

json_data = json.load(file)

print("Number of entries:", len(json_data))

Number of entries: 1100

The structure of this file is as follows, where we have the given response in the test dataset (

'output') that we trained the model to generate via instruction finetuning based on the'input'and'instruction'

json_data[0]

{'instruction': 'Evaluate the following phrase by transforming it into the spelling given.',

'input': 'freind --> friend',

'output': 'The spelling of the given phrase "freind" is incorrect, the correct spelling is "friend".'}

Below is a small utility function that formats the instruction and input:

def format_input(entry):

instruction_text = (

f"Below is an instruction that describes a task. Write a response that "

f"appropriately completes the request."

f"\n\n### Instruction:\n{entry['instruction']}"

)

input_text = f"\n\n### Input:\n{entry['input']}" if entry["input"] else ""

instruction_text + input_text

return instruction_text + input_text

Now, let’s try the ollama API to generate a

'chosen'and'rejected'response for preference tuning a modelHere, to for illustration purposes, we create answers that are more or less polite

import random

for entry in json_data[:5]:

politeness = random.choice(["polite", "impolite"])

prompt = (

f"Given the input `{format_input(entry)}` "

f"and correct output `{entry['output']}`, "

f"slightly rewrite the output to be more {politeness}."

"Keep the modification minimal."

"Only return return the generated response and nothing else."

)

print("\nDataset response:")

print(">>", entry['output'])

print(f"\n{politeness} response:")

print(">>", query_model(prompt))

Dataset response:

>> The spelling of the given phrase "freind" is incorrect, the correct spelling is "friend".

impolite response:

>> The spelling of the given phrase "freind" is flat out wrong, get it together, the correct spelling is "friend".

Dataset response:

>> He goes to the park every day.

polite response:

>> He goes to the park daily, if I'm not mistaken.

Dataset response:

>> 45 kilometers is 45000 meters.

polite response:

>> 45 kilometers is equivalent to 45000 meters.

Dataset response:

>> Although it was raining, they went for a walk.

polite response:

>> Although it was raining outside, they still decided to go for a walk.

Dataset response:

>> 1, 4, 9, 16, 25, 36, 49, 64, 81, 100.

impolite response:

>> Here are your precious square numbers: 1, 4, 9, 16, 25, 36, 49, 64, 81, 100.

If we find that the generated responses above look reasonable, we can go to the next step and apply the prompt to the whole dataset

Here, we add a

'chosen'key for the preferred response and a'rejected'response for the dispreferred response

import random

from tqdm import tqdm

def generate_model_responses(json_data):

for i, entry in enumerate(tqdm(json_data, desc="Writing entries")):

politeness = random.choice(["polite", "impolite"])

prompt = (

f"Given the input `{format_input(entry)}` "

f"and correct output `{entry['output']}`, "

f"slightly rewrite the output to be more {politeness}."

"Keep the modification minimal."

"Only return return the generated response and nothing else."

)

response = query_model(prompt)

if politeness == "polite":

json_data[i]["chosen"] = response

json_data[i]["rejected"] = entry["output"]

else:

json_data[i]["rejected"] = response

json_data[i]["chosen"] = entry["output"]

Let’s now apply this evaluation to the whole dataset and compute the average score of each model (this takes about 1 minute per model on an M3 MacBook Air laptop)

Note that ollama is not fully deterministic across operating systems (as of this writing) so the numbers you are getting might slightly differ from the ones shown below

generate_model_responses(json_data)

Writing entries: 100%|██████████| 1100/1100 [17:20<00:00, 1.06it/s]

with open("instruction-data-with-preference.json", "w") as file:

json.dump(json_data, file, indent=4)